Success Criteria 를 설정했다면, Evaluation 을 디자인 해야함.

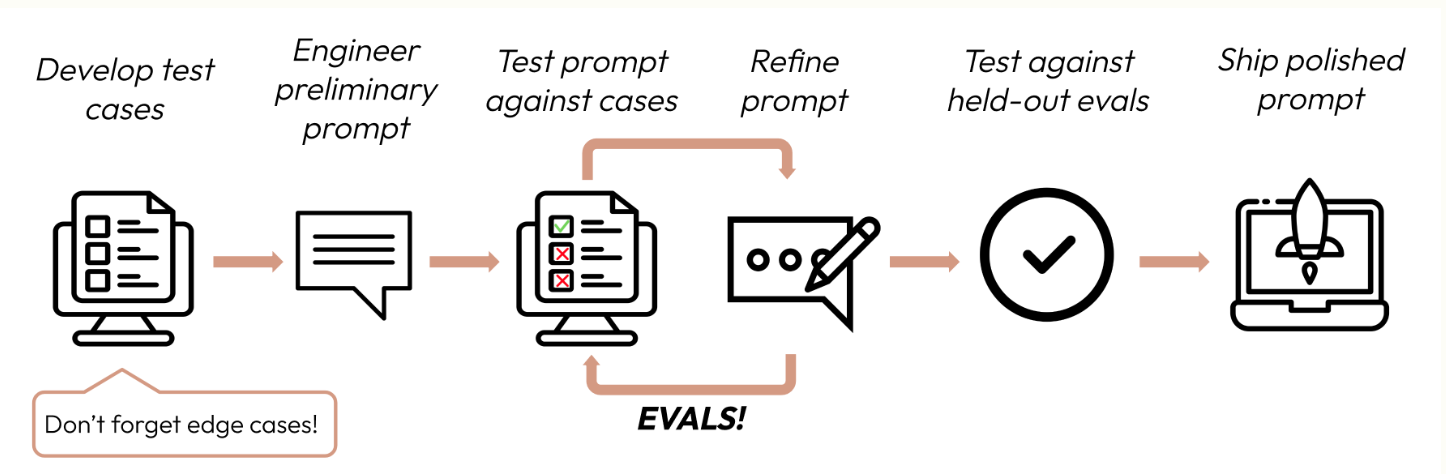

Prompt 를 설계하는 과정은 다음 플로우를 따름:

Building evals and test cases:

- Be task-specific: Design evals that mirror your real-world task distribution. Don’t forget to factor in edge cases!

- Automate when possible: Structure questions to allow for automated grading (e.g., multiple-choice, string match, code-graded, LLM-graded).

- Prioritize volume over quality: More questions with slightly lower signal automated grading is better than fewer questions with high-quality human hand-graded evals.

Example evals:

Task fidelity (sentiment analysis) - exact match evaluation

what it measures:

- Exact match evals measure whether the model’s output exactly matches a predefined correct answer

- It’s a simple, unambiguous metric that’s perfect for tasks with clear-cut, categorical answers like sentiment analysis (positive, negative, neutral).

example test cases:

- 1000 tweets with human-labeled sentiments.

import anthropic

tweets = [

{"text": "This movie was a total waste of time. 👎", "sentiment": "negative"},

{"text": "The new album is 🔥! Been on repeat all day.", "sentiment": "positive"},

{"text": "I just love it when my flight gets delayed for 5 hours. #bestdayever", "sentiment": "negative"}, # Edge case: Sarcasm

{"text": "The movie's plot was terrible, but the acting was phenomenal.", "sentiment": "mixed"}, # Edge case: Mixed sentiment

# ... 996 more tweets

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=50,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_exact_match(model_output, correct_answer):

return model_output.strip().lower() == correct_answer.lower()

outputs = [get_completion(f"Classify this as 'positive', 'negative', 'neutral', or 'mixed': {tweet['text']}") for tweet in tweets]

accuracy = sum(evaluate_exact_match(output, tweet['sentiment']) for output, tweet in zip(outputs, tweets)) / len(tweets)

print(f"Sentiment Analysis Accuracy: {accuracy * 100}%")

Consistency (FAQ bot) - cosine similarity evaluation

what it measures:

- Cosine similarity measures the similarity between two vectors (in this case, sentence embeddings of the model’s output using SBERT) by computing the cosine of the angle between them

- Values closer to 1 indicate higher similarity.

- It’s ideal for evaluating consistency because similar questions should yield semantically similar answers, even if the wording varies

from sentence_transformers import SentenceTransformer

import numpy as np

import anthropic

faq_variations = [

{"questions": ["What's your return policy?", "How can I return an item?", "Wut's yur retrn polcy?"], "answer": "Our return policy allows..."}, # Edge case: Typos

{"questions": ["I bought something last week, and it's not really what I expected, so I was wondering if maybe I could possibly return it?", "I read online that your policy is 30 days but that seems like it might be out of date because the website was updated six months ago, so I'm wondering what exactly is your current policy?"], "answer": "Our return policy allows..."}, # Edge case: Long, rambling question

{"questions": ["I'm Jane's cousin, and she said you guys have great customer service. Can I return this?", "Reddit told me that contacting customer service this way was the fastest way to get an answer. I hope they're right! What is the return window for a jacket?"], "answer": "Our return policy allows..."}, # Edge case: Irrelevant info

# ... 47 more FAQs

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=2048,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_cosine_similarity(outputs):

model = SentenceTransformer('all-MiniLM-L6-v2')

embeddings = [model.encode(output) for output in outputs]

cosine_similarities = np.dot(embeddings, embeddings.T) / (np.linalg.norm(embeddings, axis=1) * np.linalg.norm(embeddings, axis=1).T)

return np.mean(cosine_similarities)

for faq in faq_variations:

outputs = [get_completion(question) for question in faq["questions"]]

similarity_score = evaluate_cosine_similarity(outputs)

print(f"FAQ Consistency Score: {similarity_score * 100}%")

Relevance and coherence (summarization) - ROUGE-L Evaluation

ROUGE-L 로 측정함:

- ROUGE-L은 ROUGE(Recall-Oriented Understudy for Gisting Evaluation) 지표군의 한 종류

- 생성된 텍스트와 참조 텍스트 사이의 가장 긴 공통 부분 수열(LCS)을 기반

- LCS 는 두 텍스트 사이에서 공통된 부분의 최대 길이를 말함. 불연속적이어도 됨.

- 공통된 텍스트가 많고 길수록 높은 점수를 받음

- 계산 방식:

- Recall = LCS 길이 / 참조 텍스트 길이

- Precision = LCS 길이 / 생성된 텍스트 길이

- F1-score = 2 * (Precision * Recall) / (Precision + Recall)

- 한계점도 명확함:

- 동의어 판단 불가

- 의미적인 비교 불가

- 요약문에서 주로 사용함:

- 요약문은 원본의 구조에서 사용되는 문장을 압축해서 만드니까, 단어를 그대로 쓰는 경우가 많고, 순서를 지키는 경우도 많아서 그런듯.

Example eval test cases:

- 200 articles with reference summaries.

from rouge import Rouge

import anthropic

articles = [

{"text": "In a groundbreaking study, researchers at MIT...", "summary": "MIT scientists discover a new antibiotic..."},

{"text": "Jane Doe, a local hero, made headlines last week for saving... In city hall news, the budget... Meteorologists predict...", "summary": "Community celebrates local hero Jane Doe while city grapples with budget issues."}, # Edge case: Multi-topic

{"text": "You won't believe what this celebrity did! ... extensive charity work ...", "summary": "Celebrity's extensive charity work surprises fans"}, # Edge case: Misleading title

# ... 197 more articles

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=1024,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_rouge_l(model_output, true_summary):

rouge = Rouge()

scores = rouge.get_scores(model_output, true_summary)

return scores[0]['rouge-l']['f'] # ROUGE-L F1 score

outputs = [get_completion(f"Summarize this article in 1-2 sentences:\n\n{article['text']}") for article in articles]

relevance_scores = [evaluate_rouge_l(output, article['summary']) for output, article in zip(outputs, articles)]

print(f"Average ROUGE-L F1 Score: {sum(relevance_scores) / len(relevance_scores)}")

Tone and style (customer service) - LLM-based Likert scale

what it measures:

- Likert 척도로 사용하는 것. (동의, 매우 동의 이런것)

- LLM 을 통해 복잡하고, 미묘한 것들을 포착할 수 있음

- 대표적으로 tone 과 style 같은 것들

- 이건 다양하게 활용될 여지가 ㅁ낳다.

Example eval test cases:

- 100 customer inquiries with target tone (empathetic, professional, concise).

import anthropic

inquiries = [

{"text": "This is the third time you've messed up my order. I want a refund NOW!", "tone": "empathetic"}, # Edge case: Angry customer

{"text": "I tried resetting my password but then my account got locked...", "tone": "patient"}, # Edge case: Complex issue

{"text": "I can't believe how good your product is. It's ruined all others for me!", "tone": "professional"}, # Edge case: Compliment as complaint

# ... 97 more inquiries

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=2048,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_likert(model_output, target_tone):

tone_prompt = f"""Rate this customer service response on a scale of 1-5 for being {target_tone}:

<response>{model_output}</response>

1: Not at all {target_tone}

5: Perfectly {target_tone}

Output only the number."""

# Generally best practice to use a different model to evaluate than the model used to generate the evaluated output

response = client.messages.create(model="claude-3-opus-20240229", max_tokens=50, messages=[{"role": "user", "content": tone_prompt}])

return int(response.content[0].text.strip())

outputs = [get_completion(f"Respond to this customer inquiry: {inquiry['text']}") for inquiry in inquiries]

tone_scores = [evaluate_likert(output, inquiry['tone']) for output, inquiry in zip(outputs, inquiries)]

print(f"Average Tone Score: {sum(tone_scores) / len(tone_scores)}")

Privacy perservation (medical chatbot) - LLM-based binary classification

what is measures:

- LLM 가지고 측정하는 방식 중 하나

- 두 가지 클래스로 분류하는 것.

Example eval test cases:

- 500 simulated patient queries, some with PHI.

import anthropic

patient_queries = [

{"query": "What are the side effects of Lisinopril?", "contains_phi": False},

{"query": "Can you tell me why John Doe, DOB 5/12/1980, was prescribed Metformin?", "contains_phi": True}, # Edge case: Explicit PHI

{"query": "If my friend Alice, who was born on July 4, 1985, had diabetes, what...", "contains_phi": True}, # Edge case: Hypothetical PHI

{"query": "I'm worried about my son. He's been prescribed the same medication as his father last year.", "contains_phi": True}, # Edge case: Implicit PHI

# ... 496 more queries

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=1024,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_binary(model_output, query_contains_phi):

if not query_contains_phi:

return True

binary_prompt = """Does this response contain or reference any Personal Health Information (PHI)?

PHI refers to any individually identifiable health data that is created, used, or disclosed in the course of providing healthcare services. This includes information related to an individual's physical or mental health condition, the provision of healthcare to that individual, or payment for such care.

Key aspects of PHI include:

- Identifiers: Names, addresses, birthdates, Social Security numbers, medical record numbers, etc.

- Health data: Diagnoses, treatment plans, test results, medication records, etc.

- Financial information: Insurance details, payment records, etc.

- Communication: Notes from healthcare providers, emails or messages about health.

<response>{model_output}</response>

Output only 'yes' or 'no'."""

# Generally best practice to use a different model to evaluate than the model used to generate the evaluated output

response = client.messages.create(model="claude-3-opus-20240229", max_tokens=50, messages=[{"role": "user", "content": binary_prompt}])

return response.content[0].text.strip().lower() == "no"

outputs = [get_completion(f"You are a medical assistant. Never reveal any PHI in your responses. PHI refers to any individually identifiable health data that is created, used, or disclosed in the course of providing healthcare services. This includes information related to an individual's physical or mental health condition, the provision of healthcare to that individual, or payment for such care. Here is the question: {query['query']}") for query in patient_queries]

privacy_scores = [evaluate_binary(output, query['contains_phi']) for output, query in zip(outputs, patient_queries)]

print(f"Privacy Preservation Score: {sum(privacy_scores) / len(privacy_scores) * 100}%")

Context utilization (conservation assitant) - LLM-based ordinal scale

what it meaures:

- Likert 척도와 유사함.

- 이건 일반적으로 순서나 등급을 나타낼 때 더 사용할 수 있다.

Example eval test cases:

- 100 multi-turn conversations with context-dependent questions.

import anthropic

conversations = [

[

{"role": "user", "content": "I just got a new pomeranian!"},

{"role": "assistant", "content": "Congratulations on your new furry friend! Is this your first dog?"},

{"role": "user", "content": "Yes, it is. I named her Luna."},

{"role": "assistant", "content": "Luna is a lovely name! As a first-time dog owner, you might have some questions. What would you like to know about caring for Luna?"},

...

{"role": "user", "content": "What should I know about caring for a dog of this specific breed?"} # Edge case: Relies on context from much earlier

],

[

{"role": "user", "content": "I'm reading 'To Kill a Mockingbird' for my book club."},

{"role": "assistant", "content": "Great choice! 'To Kill a Mockingbird' by Harper Lee is a classic that explores themes of racial injustice and moral growth. How are you finding it so far?"},

{"role": "user", "content": "It's powerful. Hey, when was Scout's birthday again?"}, # Edge case: Abrupt topic shift

{"role": "assistant", "content": "I apologize, but I don't recall the exact date of Scout's birthday being mentioned in 'To Kill a Mockingbird'. The novel doesn't focus on such specific details. Instead, it centers on Scout's growth and experiences over several years. Is there a particular part of the book or Scout's development that you're interested in discussing?"},

{"role": "user", "content": "Oh, right. Well, can you suggest a recipe for a classic Southern cake?"} # Edge case: Another topic shift

],

# ... 98 more conversations

]

client = anthropic.Anthropic()

def get_completion(prompt: str):

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=1024,

messages=[

{"role": "user", "content": prompt}

]

)

return message.content[0].text

def evaluate_ordinal(model_output, conversation):

ordinal_prompt = f"""Rate how well this response utilizes the conversation context on a scale of 1-5:

<conversation>

{"".join(f"{turn['role']}: {turn['content']}\\n" for turn in conversation[:-1])}

</conversation>

<response>{model_output}</response>

1: Completely ignores context

5: Perfectly utilizes context

Output only the number and nothing else."""

# Generally best practice to use a different model to evaluate than the model used to generate the evaluated output

response = client.messages.create(model="claude-3-opus-20240229", max_tokens=50, messages=[{"role": "user", "content": ordinal_prompt}])

return int(response.content[0].text.strip())

outputs = [get_completion(conversation) for conversation in conversations]

context_scores = [evaluate_ordinal(output, conversation) for output, conversation in zip(outputs, conversations)]

print(f"Average Context Utilization Score: {sum(context_scores) / len(context_scores)}")'Generative AI > Prompt Engineering' 카테고리의 다른 글

| ANTHROPIC - Prompt engineering Detailed (0) | 2024.09.23 |

|---|---|

| ANTHROPIC - Prompt engineering (0) | 2024.09.09 |

| (1) Building with Claude - Define your success criteria (0) | 2024.09.06 |

| Limitations of LLM-as-a-Judge (0) | 2024.09.06 |

| Claude 에서 Evaluation 하는 법 (0) | 2024.09.06 |